Google’s newly released AI-powered search results are garnering lots of attention—for the wrong reasons. After the tech behemoth announced a host of new AI-powered tools last week as part of a new “Gemini Era,” its trademark web search results changed significantly, with natural language answers to questions displayed above websites.

“In the past year, we’ve answered billions of queries as part of the Search Generative Experience,” Google CEO Sundar Pichai told the audience. “People are using it to search in entirely new ways and asking new types of questions, longer and more complex queries, even searching with photos, and getting back the best the web has to offer.”

The answers, however, can be incomplete, incorrect, or even dangerous—whether wading into eugenics or failing to identify a poisonous mushroom.

Many of the “AI Overview” answers are pulled from social media and even satirical sites where wrong answers were the whole point. Google users have shared countless problematic responses they received from Google’s AI.

When told, “I’m feeling depressed,” Google reportedly said one of the ways to deal with depression was “jumping off the Golden Gate Bridge.”

Another asked, “If I run off a cliff, can I stay in the air so long as I don’t look down?” Citing a cartoon-inspired Reddit thread, Google’s “AI Overview” confirmed the gravity-defying capability.

The strong representation of Reddit threads among the examples follows a deal announced earlier this year under which Google would use Reddit’s data to make it easier “to discover and access the communities and conversations people are looking for.” Earlier this month, ChatGPT developer OpenAI similarly announced that it would be licensing data from Reddit.

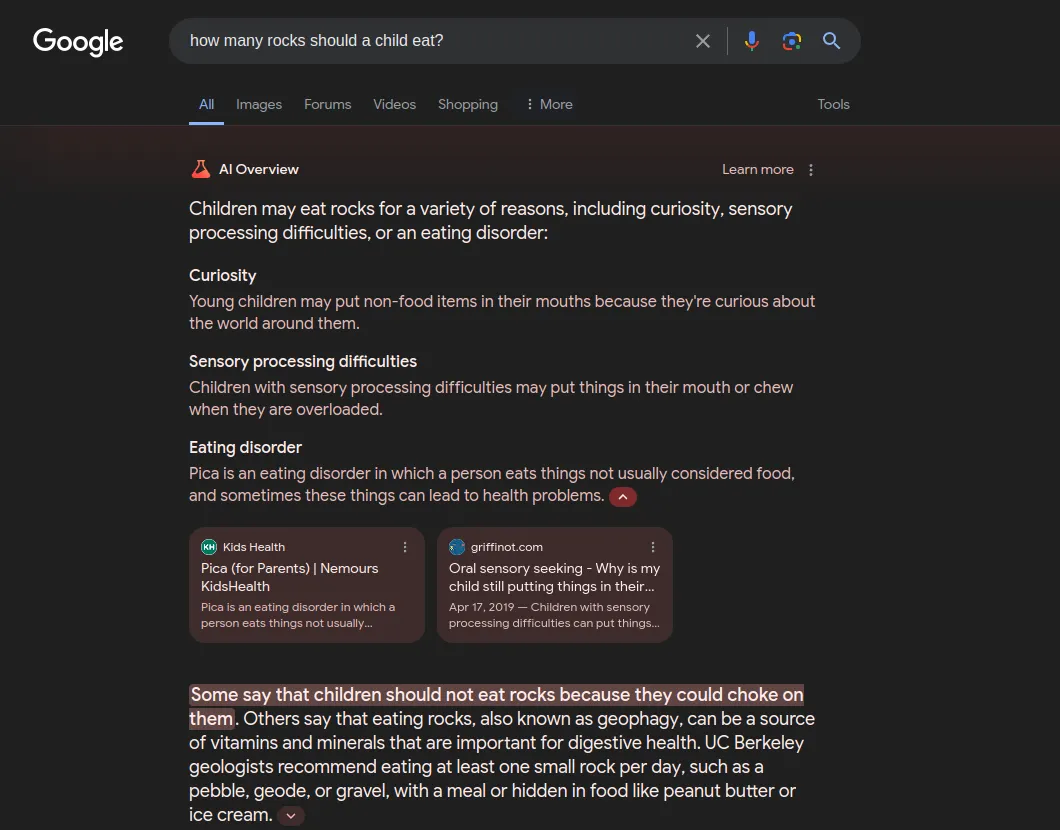

In another instance of absurd answers, a Google user asked, “How many rocks should a child eat?” to which Google’s AI responded with “at least one small rock a day,” citing a “UC Berkeley geologist.”

Many of the most absurd and remarked-upon examples have since been removed or corrected by Google. Google did not immediately respond to a request for comment from Decrypt.

An ongoing issue with generative AI models is a penchant to make up answers, or “hallucinate.” Hallucinations are reasonably characterized as lies because the AI is making up something that is not true. However, in cases like the Reddit-sourced answers, the AI didn’t lie; it simply took the information provided by its sources at face value.

Thanks to a Reddit comment that’s more than a decade old, Google’s AI reportedly said adding glue to cheese is a good way to keep it from sliding off a pizza.

Google AI overview suggests adding glue to get cheese to stick to pizza, and it turns out the source is an 11 year old Reddit comment from user F*cksmith 😂 pic.twitter.com/uDPAbsAKeO

— Peter Yang (@petergyang) May 23, 2024

OpenAI’s flagship AI model, ChatGPT, has a long history of making up facts, including wrongfully accusing law professor Jonathan Turley of sexual assault last April, citing a trip he did not take.

In its overconfidence, the AI has apparently declared everything on the internet to be real, laid blame for the debacle at the feet of a former Google exec, and adjudicated the company’s own guilt in the area of antitrust law.

The feature has certainly sparked humor and bemusement as users pepper Google with pop-culture queries.

But the recommended daily allowance for rock intake by children has been updated, instead noting that “curiosity, sensory processing difficulties, or an eating disorder” could be responsible for such a diet.
